Appendix A – Cryptography & PKI

Several topics in this book involve cryptography and/or PKI (Public Key Infrastructure). IPsec (Internet Protocol layer security) includes both AH (Authentication Header) and ESP (Encapsulating Security Payload). AH is used to do sender authentication of packets, and detection of tampering. Instead of using a combination of message digest (e.g. SHA1) with asymmetric cryptography (e.g. RSA), AH uses a keyed message digest, also called Hashed Message Authentication Code (HMAC). ESP does symmetric key encryption of the payload part of an IP packet. The symmetric key for this can be manually distributed (referred to as “shared secret key”), or automatically exchanged in a secure manner using Internet Key Exchange (IKE). IKE uses an application of asymmetric key cryptography called the Diffie– Hellman Key Agreement algorithm, and a new kind of digital certificate called an IPsec Certificate to fully automate distribution of symmetric keys for IPsec.

The cryptographic mechanisms used in these IP security protocols are explained in this chapter. If you are not already familiar with them, you should review this chapter before trying to understand the IP security protocols themselves. There are some good books listed in the bibliography that cover these cryptographic mechanisms in considerably more detail, if this chapter is not sufficient for you. I spent two years with VeriSign creating and delivering training on just these topics, and took an entire week to cover the topics in this chapter. What follows is a very concise coverage of these topics.

A.1 – Cryptography Standards

The X.509 digital certificate is the foundation of PKI. It was originally specified as part of the ITU-T (formerly CCITT) X.500 standard for directory services. The public key certificate was specified in part of this larger standard, as fascicle X.509, “The Directory: Public-key and attribute certificate frameworks”, July 1998. What is in current use is version 3 of this specification. This standard has been incorporated into the RFC standard set as RFC 5280, “Internet X.509 Public Key Infrastructure Certificate and Certificate Revocation List (CRL) Profile”, May 2008. The standards related to PKI and cryptography based on X.509 certificates are overseen by the PKIX Working Group of the IETF.

Since X.500 was part of the OSI network standard, there are some artifacts of that origin in the X.509 certificate, such as distinguished names, for example:

cn=Lawrence Hughes, o=InfoWeapons Corp., ou=Administration, c=PH

RFC 5280 describes ways to integrate this OSI technology into TCP/IP, in addition to making all of the details of the certificate itself available to all for free. If this syntax looks familiar, it is also found in LDAP, which also was derived from the OSI X.500 technology.

Some of the concepts covered in this chapter were first defined in a set of standards from RSA called PKCS (Public Key Cryptography Standards). For example, S/MIME was first defined in PKCS #7. There were a number of PKCS standards, the most important of which were:

* PKCS #1 – RSA Cryptography Standard, now in RFC 3447, “Public-Key Cryptography Standards (PKCS) #1: RSA Cryptography Specifications Version 2.1”, February 2003.

* PKCS #3 – Diffie-Hellman Key Agreement Standard

* PKCS #5 – Password-based Encryption Standard, now in RFC 2898, “PKCS #5: Password-Based

Cryptography Specification Version 2.0”, September 2000

* PKCS #7 – Cryptographic Message Syntax Standard, now in RFC 2315, “PKCS #7: Cryptographic message Syntax Standard Version 1.5”, March 1998. The specifics of S/MIME, which is a large part of this standard have been refined in RFC 3851, “Secure/Multipurpose Internet Mail Extensions (S/MIME) Version 3.1 Message Specification”, July 2004; and RFC 3852, “Cryptographic Message Syntax (CMS)”, July 2008. The most recent specification of S/MIME is RFC 5751, “Secure/Multipurpose Internet Mail Extensions (S/MIME) Version 3.2 Message Specification”, January 2010.

* PKCS #8 – Private-Key Information Syntax Standard, now in RFC 5208, “Public-Key Cryptography

Standards (PKCS) #8: Private-Key Information Syntax Specification Version 1.2”, May 2008

* PKCS #10 – Certification Request Standard, now in RFC 2986, “PKCS #10: Certification Request

Syntax Specification Version 1.7”, November 2000

* PKCS #11 – Cryptographic Token Interface (Cryptoki)

* PKCS #12 – Personal Information Exchange Syntax Standard

* PKCS #15 – Cryptographic Token Information Format Standard, now ISO/IEC 7816-15.

The original PKCS standards are still available today if you are interested, but most of the important ones have been incorporated into the RFC standards track and continue to evolve there. However, even today, we often refer to creating a PKCS #10 Certificate Signing Request, using the PKCS #11 Cryptoki Application Program Interface to a cryptographic module, or exporting public and/or private keys securely in a PKCS #12 package.

A.2 – Cryptography, Encryption and Decryption

Cryptography involves scrambling information in such a way that all of the information is still present, but recoverable only by a recipient who has access to the scheme by which it can be unscrambled. In a very simple case, alphabetic substitution could map each letter of the alphabet to the letter 3 further along, so that A becomes D, B becomes E, etc. That is called an encryption algorithm. Here is the complete mapping from the letters in the message (the plaintext) to the encrypted letters (the ciphertext) for this scheme. To encrypt a message, you would take each letter (in the first line) and map it to the one below it (in the second line).

The word “HELLO” would become “KHOOR”. To unscramble this message, the recipient would need to know how to reverse the encryption (a decryption algorithm), which in this case would involve mapping each letter to the one three before each of the encrypted letters. So, D would become A, E would become B, etc. You could use the same two lines, but instead locate each letter from the ciphertext in the second line and replace it with the letter above it (in the first line). Hence “KHOOR” would become “HELLO”. In this case, the algorithm must be kept secret, because knowledge of it would allow anyone to decrypt the message. The security of this system depends on keeping the algorithm secret.

A.2.1 – Cryptographic Keys

The “shift by 3” encryption system is so simple that almost anyone could figure out how to crack a message encrypted with it in just a few minutes. To make it a stronger system, instead of always shifting by three characters, you could shift by n letters (where n could be any number from 0 to 25). The “shift by 3” scheme would be a special case of this “shift by N” scheme, where N = 3. In the “shift by N” scheme, the value of N is known as an encryption key. To decrypt a message, the recipient needs to know not only the decryption algorithm, but also the specific key used to encrypt a given message. One day, the sender might use N=7 (“HELLO” becomes “OLSSV”), but on the next, they might use N=12 (“HELLO” becomes “TQXXA”). The same plaintext results in different ciphertexts, depending on the key used.

In general, real encryption algorithms are key driven. The same algorithm can produce radically different ciphertexts, depending on which key is used. A key is really just an ordered string of bits. You might think of the encryption algorithm as a generalized machine, in which case a particular key would be like one of many possible cams, which control the operation of the machine (how it slices and dices the data). Replace the cam with a different one and the same machine slices and dices the data in a completely different way.

In fact, modern algorithms are designed in such a way that complete knowledge of the general algorithm itself can be made available to anyone, and it does not make it any easier for them to crack a message encrypted with a key that they do not know. In other words, the security of the system is entirely dependent on keeping the keys used (not the details of the algorithm) secret. Full details of most modern encryption algorithms are not only known, they are standardized, peer reviewed, and available to anyone that is interested. The more good cryptanalysts that have reviewed an algorithm, and pronounced it strong (difficult to crack), the better. It is not possible to prove than an algorithm is uncrackable, but you can build evidence that as yet, no one has managed to crack it (or at least, no one has admitted to being able to crack it).

A.2.2 – Symmetric Key Cryptography

In the “shift by N” system, the same key that was used to encrypt some plaintext is also used to decrypt the resulting ciphertext. This is analogous to your house key. You use the same key to lock your house when you leave, and to unlock it when you return. This is the defining characteristic of symmetric key cryptography which has been around for about 2,000 years. In comparison, with asymmetric key cryptography (invented only recently), one key of a related pair of keys is used to encrypt some plaintext, but only the other key the pair can decrypt the resulting ciphertext. Not even the key it was encrypted with can decrypt it. This is not as intuitive as symmetric key cryptography, but allows some remarkable things to be done.

Standards related to symmetric key cryptography include:

* FIPS PUB 46 – Data Encryption Standard (January 1977)

* FIPS PUB 46-1 – Data Encryption Standard (January 1988)

* FIPS PUB 46-2 – Data Encryption Standard (December 1993)

* FIPS PUB 46-3 – Data Encryption Standard (October 1999) (includes Triple DES)

* FIPS PUB 197 – Advanced Encryption Standard (November 2001)

* RFC 3565, “Use of the Advanced Encryption Standard (AES) Encryption Algorithm in Cryptographic Message Syntax”, July 2003

* RFC 3566, “The AES-XCBC-MAC-96 Algorithm and Its Use With IPsec”, September 2003

* RFC 3602, “The AES-CBC Cipher Algorithm and Its Use with IPsec”, September 2003 A.2.3 – Cryptanalysis

The algorithm just described (“shift by N”) could be known by the recipient in advance, but somehow the sender would have to let the recipient know which key (which value of N) was used to encrypt a given message. There are only 26 possible keys (0 to 25), which would only take 5 bits to represent (actually 5 bits would allow up to 32 possible keys). A key length of 5 bits is not very secure. It would not take long to try every possible key, and chances are, only one of the possible values would result in an English text. If the message was longer, or the same key was used for all messages on a given day, once a third party figured out the key being used, they could easily decrypt the rest of the message, or all of the messages encrypted with it.

Note that in the “shift by N” system, the key N=0 is a weak key, as the ciphertext is identical to the plaintext. Real encryption algorithms may have weak keys as well. DES has 16 known weak keys, which you have to check for when you generate a random DES key (although the probability of creating one is quite low). It is amazing that after all the slicing and dicing that DES does on the binary level, the result (using a weak key) could be exactly what you started with, but that is the way it works.

Cracking secure messages (being able to recover the plaintext of an encrypted message without having access to the correct key) is called cryptanalysis. There are many techniques used to cryptanalyse encrypted messages. For example, with alphabetic substitution schemes, letter frequencies can help you identify the encrypted characters for the most common letters (the character that appears most often in the ciphertext is probably the code for E, etc). With modern algorithms there are other techniques. With DES, a technique called differential cryptography was used to determine its real strength only justified a 56-bit key – anything longer would only give the illusion of more strength. Sometimes cryptanalysts can completely cryptanalyse a new proposed algorithm, which means it is cracked, and messages can be decrypted without knowledge of the keys used. You might think of cryptography as building secure safes and cryptanalysis as safe cracking. Cryptology is the science that includes both cryptography and cryptanalysis. RSA was founded by two cryptographers and one cryptanalyst. The cryptographers proposed one asymmetric key system after another, then the cryptanalyst would analyze and break them. The cryptographers would then try to design one more difficult to break, based on how the previous system was broken. When an algorithm remained unbroken after quite an effort, they released it as the RSA algorithm. Since then, many other cryptanalysts have tried to break it, with no success. The RSA algorithms is now specified in RFC 4055, “Additional Algorithms and Identifiers for RSA Cryptography for use in the Internet X.509 Public Key Infrastructure Certificate and Certificate Revocation List (CRL) Profile”, June 2005.

The “shift by N” encryption scheme can be implemented with two disks or two rings, each of which has the entire alphabet written on them, but one of which can be rotated to put A on the plaintext ring next to any letter on the ciphertext ring. Various Radio and TV shows (as well as Cereal manufacturers) have included these cipherdisks as premiums over the years. It is a real (but not very strong) cryptographic system, complete with the concept of a key.

A Secret Decoder Ring

From Wikipedia Creative Commons

A.2.4 – Cryptographic Strength

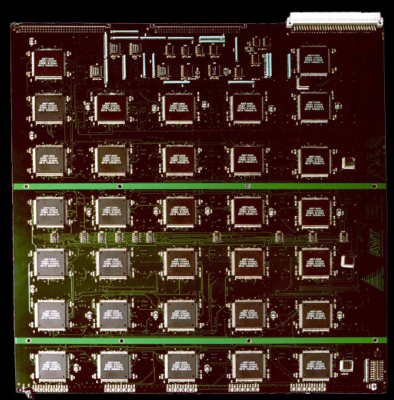

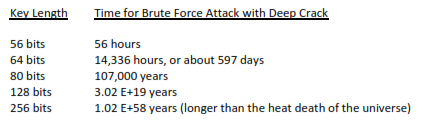

Modern real world cryptographic systems don’t do just alphabetic substitution, or work on just ASCII text. They accept a block of binary data as input, and scramble information in very complex ways at the bit level. The output is also a block of binary data. A scheme like the DES (Data Encryption Standard) has a block size of 64 bits, using a 56-bit binary key, producing a 64 bit binary ciphertext. With 56-bit keys, there are 256 (about 7.2 E+16) possible keys. Trying every possible key is known as the brute force attack. When DES was released in 1975, that number of keys was effectively considered infinite. However, in 1998, a group at the Electronic Frontier Foundation built a machine called Deep Crack with extensive hardware parallelism that could try roughly a million DES keys every microsecond. At this rate, it took about 56 hours to try all possible keys.

RSA ran a “DES challenge” that involved decrypting a plaintext containing an English phrase in ASCII characters. There were several “crowd computing” attacks, which divided the entire key space into millions of chunks, which individuals could download and try to find the right key among. These typically took several months to locate the valid key, even with tens of thousands of people searching for it. With Deep Crack the problem was recognizing when it had found the right key. Most decryptions with a wrong key resulted in non-ASCII binary gibberish, which could be discarded. Another computer checked the relatively few decryptions that resulted in ASCII text to see if it contained any English words. If so, a human was alerted, who could determine if a valid English phrase was recovered. After Deep Crack, DES was considered no longer “strong”. The assumption was that if a few people could create such a machine with a budget of about $200,000 that at least some governments (and certainly the U.S. NSA) probably already had and used such machines.

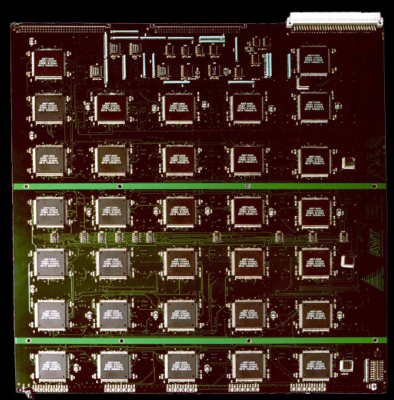

One of the circuit boards from Deep Crack, with multiple DES chips (from Wikipedia Creative Commons)

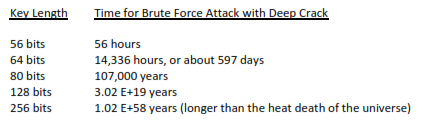

Each additional bit of key length doubles the time that Deep Crack would take to find a key, so here is a table of how long it would take for various common key lengths:

As you can see, with a symmetric key algorithm, the time required for a brute force attack quickly increases with increasing key length to totally impractical time scales. Even 128 bit keys are probably sufficient for most purposes. With 256 bit keys the time is greater than the expected life of the universe.

The most common symmetric key algorithm in use today is the AES (Advanced Encryption Standard) which replaced DES in 2000. It is very fast, and can use either 128 or 256 bit binary keys. There are currently no known attacks against AES that are faster than brute force. Several algorithms were entered in a competition to replace DES as the new standard, including TwoFish (successor to BlowFish) and Serpent. The algorithm that won (and is now known as AES) was called Rijndael (prounounced RAIN- dell). There are no royalties required for use, but many countries have laws restricting use of strong cryptography.

A.2.6 – Key Management

Whether you are using the “shift by n” scheme or AES, the sender must somehow securely communicate the symmetric key they used to the message recipient. This is not easy to do, as if it is sent via any plaintext channel (e.g. spoken over a telephone call, sent by fax, etc), then the easiest way to attack the system is to intercept the key. Systems based on symmetric key cryptography alone have very complex key management systems, and these are usually the weakest link of any such system. Kerberos is an example of a cryptographic system based entirely on symmetric key cryptography (actually DES).

A.3 – Message Digest

Another cryptographic algorithm (message digest) does not include the entire contents of the input text in the output, even in scrambled form. It is not possible to recover the plaintext from a generated message digest. It is a one way algorithm. In fact, regardless of how large the input document is, the output is a fixed length (for a given message digest algorithm). For example, the MD5 message digest algorithm produces a 128 bit output, SHA1 produces a 160 bit output, and SHA-256 produces a 256 bit output. The output characterizes the input document. Any change at all in the input document is amplified by the message digest algorithm. Even a single bit difference in the input would result in a totally different output. This makes it very good for determining if two documents are identical (or the same document at two different points in time). If a document is modified in any way at all (changing, deleting or adding characters or bits), the generated message digest will be different after the modification. It is like an extremely sensitive checksum. The term Message Authentication Code (MAC) is also sometimes used for Message Digest (not to be confused with the network term MAC, which refers to the Media Access Control layer, or MAC Addresses).

The following RFCs define Message Digest Algorithms:

* RFC 1321, “The MD5 Message-Digest Algorithm”, April 1992

* RFC 3174, “US Secure Hash Algorithm 1 (SHA1)”, September 2001

* RFC 3874, “A 224-bit One-way Hash Function: SHA-224”, September 2004

* RFC 4634, “US Secure Hash Algorithms (SHA and HMAC-SHA)”, July 2006

Note that RFC 4634 includes definition of the newer SHA-224, SHA-256, SHA-384 and SHA-512.

A.4 – Asymmetric Key Cryptography

In the early 1970s, some English cryptographers at GCHQ (James Ellis, Clifford Cocks and Malcolm Williamson) invented an entirely new type of cryptography, which was kept classified and not revealed until 1997. It was re-invented independently in 1976 by two researchers named Whitfield Diffie and Martin Hellman (based on work by Ralph Merkle). This scheme is called asymmetric key cryptography, and it involves using not one key, but a related pair of keys. If you encrypt something with one of the keys, only the other key of a pair will decrypt the ciphertext produced. Even the key used to encrypt the plaintext will not correctly decrypt the ciphertext. Later, three mathematicians from M.I.T. named Ron Rivest, Adi Shamir and Leonard Adleman created a company called RSA that was the first to commercially exploit this concept. Their asymmetric key algorithm (also called RSA) is still in extensive use today.

All asymmetric key algorithms depend on some very hard mathematical problem that is far faster to do in one direction (e.g. multiplying two large prime numbers together) than the other direction (e.g. factoring a giant product of two primes into the two prime numbers). The mathematical problems are referred to as trap door functions (because a trap door allows things to go easily in one direction, but not in the other). The fast direction (e.g. multiplying two giant primes) is used when you generate a pair of matched keys, but deriving the private key from the public key involves working the problem in the reverse direction (factoring the product of the two primes). The time required to do this increases greatly with the length of the product. A product that is 512 bits long (about 154 decimal digits) is considered weak. A product that is 1024 bits long (about 308 decimal digits) is considered fairly strong. A product that is 2048 bits (about 616 decimal digits) or longer is considered very strong. As computing power increases (and factoring theory improves) year by year, what was once considered strong is later considered weak, so key lengths must slowly increase. For example, cracking a 512 bit RSA key is estimated to take about 7,000 MIP-years to accomplish. In 1979, the Motorola 68000 ran at about 1 MIPS, so it would take 7,000 years for one machine (or 70 years for 100 machines) to break one 512 bit key. In 2000, the AMD Athlon ran at about 3500 MIPS, so it would take about 2 years to do the same thing. The most recent Intel Core i7 (i980EE) runs at about 147,000 MIPS, so one machine with this CPU would be able to crack a 512 bit key in about 18 days. Such increases will eventually result in having to use impractically long keys with RSA. Fortunately, a newer asymmetric key algorithm called Elliptic Curve Cryptography (ECC) has better characteristics (strength goes up more rapidly with increasing key length than it does with RSA). At some point, as computing power increases, cryptographic systems will have to switch over to using ECC. The standards already support it.

There is also an asymmetric key algorithm called the Digital Signature Algorithm (DSA), created by the U.S. NSA (National Security Agency). DSA is part of the Digital Signature Standard (DSS) which is specified in FIPS 186. The DSS was adopted in 1996 as FIPS 186-1, and then expanded in 2000 as FIPS 186-2, and again in 2009 as FIPS 186-3. There is no general encryption algorithm (e.g. for digital envelope) associated with DSA – it is just for digital signatures.

Let’s say Alice creates two keys. She publishes one of them (called her public key) to the entire world. The other one (called her private key), she never shows to anyone. Anyone in the world can obtain and use her public key to encrypt things, but she is the only one than can decrypt things encrypted with her public key. So if she wanted to send something securely to Bob, she would obtain Bob’s public key (even through insecure channels – it does not matter who sees it). She would encrypt her message using Bob’s public key, and send the encrypted message to him. Anyone in the world would be able to send Bob such a message, because all they need is his public key. He would use his private key to decrypt it. He is the only person in the world who could do this (assuming no one has discovered his private key). This provides complete privacy, without having to do complex and insecure symmetric key distribution.

A.4.1 – Digital Envelopes

Compared with symmetric key cryptography, asymmetric key cryptography is very slow (in terms of how many bytes per second can be encrypted), so it is not commonly used to encrypt entire long messages.

It is usually used just to encrypt a short (e.g. 128 bit) symmetric session key or a short (e.g. 160 bit) message digest. So, in a real system (such as S/MIME e-mail), Alice would generate a random symmetric session key (just for this e-mail message, never to be used again), encrypt the message with it (using AES), then encrypt the symmetric session key and Bob’s public key (using RSA). She would send both the encrypted message and the encrypted session key to Bob. He would first decrypt the session key with his private key (using RSA), then use the recovered session key to decrypt the message (using AES). This is called creating a digital envelope. A digital envelope provides privacy. Asymmetric key cryptography solves the problem of how to securely distribute symmetric keys.

A.4.2 – Digital Signatures

Asymmetric key cryptography is also useful for creating digital signatures, when used in combination with a message digest algorithm. As an example, to produce a digital signature of a document, Alice first produces a message digest of the message (using SHA1) then encrypt the message digest with her private key (using RSA). The result would be encoded into ASCII and appended to the message. She would send the message including the digital signature to Bob. Bob would strip off the digital signature, and produce a new message digest of the message (using SHA1). He would also decrypt the encrypted message digest with Alice’s public key (using RSA). He would then compare the recovered message digest with the newly generated message digest. If they match, then the digital signature is valid. If they don’t match then either the message was signed using someone else’s private key (hence was probably sent by someone else), or the message had been tampered with along the way. In either case, the message should not be accepted as valid. A digital signature provides sender authentication (knowing for certain that it was sent by the person it appears to be from), and message integrity (being able to detect any possible change to the message). These are two very useful things to know about a message.

A.4.3 – Combined Digital Signature and Digital Envelope

By itself, a digital signature does not provide privacy. You may not care who reads a message, but you want to know for certain who it came from, and whether or not it has been tampered with along the way. In this case, only a digital signature is needed. If you also want privacy, then you must both digitally sign and then digitally envelope the message. There is no conflict between the two processes. S/MIME e-mail allows you to do neither, either or both. They are completely independent processes (although if combined, the digital signature must be done first and checked last).

A.4.4 – Public Key Management and Digital Certificates

When Alice sends Bob a digitally signed message, she has all the keys she needs (her own private key) at the time she composes the message. When he is reading the message, Bob needs Alice’s public key, which she can simply append to the message. If Alice appended her key, Bob can use the appended public key to check the signature. If she didn’t, Bob can obtain her public key in various other ways, perhaps she published her key on a website via HTTP, or in an LDAP directory.

When Alice sends Bob a digitally enveloped message, she needs Bob’s public key, which she may not have. Bob might have published it on his website, or in an LDAP directory, or he could simply send Alice a digitally signed message first. Many secure e-mail systems save the public keys of the senders of all digitally signed messages in case you want to later send them digitally enveloped messages. When Bob receives a digitally enveloped message, he has all the keys he needs to open it (his own private key).

Regardless of how Bob obtained Alice’s public key, how does he know for certain that the key really belongs to Alice? In general, anytime you use a public key, you must determine if it is the real public key for that user. If Charlie can trick Bob into using his public key to check what he thinks is Alice’s signature, Charlie can sign the message using his own private key and Bob will think it is a valid signature from Alice (this is called the public key substitution threat).

A public key must be distributed embedded in a digitally signed document that includes other identifying information, such as the key owner’s name, email address, expiration date, etc. This is called a public key digital certificate. It should be produced and signed by a trusted third party (someone other than Alice and Bob, but who