instructions per second (or one divided by 2 billionths). The CPU is then said to operate at 500 MIPS, or 500 million instructions per second. In the world of personal computers, one commonly refers to the rate at which the CPU can process the simplest instruction (i.e. the clock rate). The CPU is then rated at 500 MHz (megahertz), where mega indicates million and hertz means “times or cycles per second”. For powerful computers, such as workstations, mainframes and supercomputers, a more complex instruction is used as the basis for speed measurements, namely the so-called floating-point operation. Their speed is therefore measured in megaflops (millions of floating-point operations per second) or, in the case of very fast computers, teraflops (trillions of flops).
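The arithmetic behind these ratings can be checked with a short Python sketch. It assumes, as the text does, that exactly one instruction completes every 2 nanoseconds; the constant and function names are illustrative only.

```python
# Decimal (SI) prefixes used in speed ratings
MEGA = 10**6
GIGA = 10**9
TERA = 10**12

def rate_per_second(seconds_per_operation: float) -> float:
    """Operations per second when each operation takes a fixed time."""
    return 1.0 / seconds_per_operation

instructions_per_second = rate_per_second(2e-9)       # one instruction every 2 ns
print(f"{instructions_per_second / MEGA:.0f} MIPS")   # 500 MIPS
# If one simple instruction completes per clock cycle, the clock rate is the same figure:
print(f"{instructions_per_second / MEGA:.0f} MHz")    # 500 MHz
print(TERA // GIGA)  # 1000: a teraflop is a thousand gigaflops, i.e. a trillion flops
```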

In practice, the speed of a processor is dictated by four different elements: the “clock speed”, which indicates how many simple instructions can be executed per second; the word length, which is the number of bits that can be processed by the CPU at any one time (32 or 64 bits for a Pentium IV chip, depending on the model); the bus width, which determines the number of bits that can be moved simultaneously in or out of the CPU; and the physical design of the chip, in terms of the layout of its individual transistors. The latest Pentium processor has a clock speed of about 4 GHz and contains well over 100 million transistors. Compare this with the clock speed of about 5 MHz achieved by the 8088 processor, with its 29 000 transistors!
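A quick Python sketch puts the figures above side by side and shows what word length means in practice: a register of n bits can hold 2^n different values. The figures are the approximate ones quoted in the text; the helper name is illustrative.

```python
def max_unsigned(word_bits: int) -> int:
    """Largest unsigned integer that fits in a register of word_bits bits."""
    return 2**word_bits - 1

# Approximate figures quoted in the text
modern_clock_hz = 4e9          # about 4 GHz
i8088_clock_hz = 5e6           # about 5 MHz
modern_transistors = 100e6     # "well over 100 million"
i8088_transistors = 29_000

print(f"Clock speed ratio: {modern_clock_hz / i8088_clock_hz:.0f}x")                 # 800x
print(f"Transistor ratio: at least {modern_transistors / i8088_transistors:.0f}x")   # 3448x
for bits in (16, 32, 64):
    print(bits, "bits ->", max_unsigned(bits))
```

Clock speed alone understates the gap, since a wider word also lets each instruction work on far larger numbers at once.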

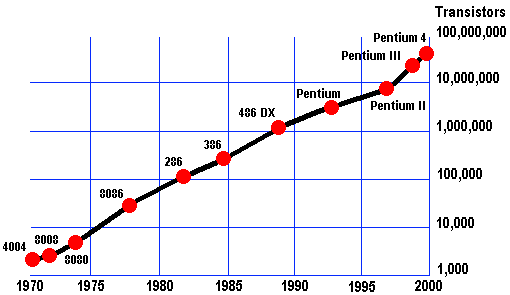

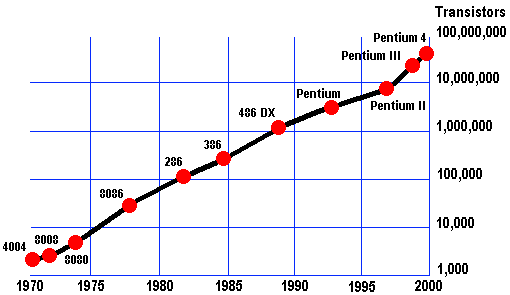

Moore’s Law (see Figure 4-2) states that processing power doubles for the same cost approximately every 18 months.

40 [Free reproduction for educational use granted]

© Van Belle, Eccles & Nash

Section II

4. Hardware

Figure 4-2. Illustration of Moore’s Law
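Taking the 18-month doubling period at face value, the growth it implies can be sketched in Python. This is an idealised model only; real progress does not follow a smooth exponential curve, and the function name is illustrative.

```python
def moores_law_factor(years: float, doubling_months: float = 18.0) -> float:
    """Growth factor in processing power (for the same cost) after `years`,
    assuming it doubles every `doubling_months` months."""
    return 2 ** (years * 12 / doubling_months)

for years in (1.5, 3, 6, 15):
    print(f"after {years} years: x{moores_law_factor(years):.0f}")
# after 1.5 years: x2
# after 3 years: x4
# after 6 years: x16
# after 15 years: x1024
```

Fifteen years of doubling every 18 months amounts to ten doublings, roughly a thousand-fold increase.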

4.2.4 Von Neumann versus Parallel CPU Architecture

The traditional model of the computer has one single CPU to process all the data. This is called the Von Neumann architecture, after John von Neumann, who engineered this approach to computers in the days when computers were still a dream.

Except for entry-level personal computers, most computers now have two, four, or up to sixteen CPUs sharing the main processing load, plus various support processors to handle maths processing, communications, disk I/O, graphics or signal processing. In fact, many CPU chips now contain multiple “cores”, each representing an individual CPU.

Some supercomputers that have been designed for massively parallel processing have up to 64,000 CPUs. These computers are typically used only for specialised applications such as weather forecasting or fluid modelling. Today’s supercomputers are mostly clusters (tight networks) of many thousands of individual computers.
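The idea of several processors sharing one processing load can be sketched in Python: the data is split into one chunk per worker, each worker handles its share, and the partial results are combined. This is a minimal illustration using the standard `concurrent.futures` module; the function names and the choice of four workers are assumptions. In CPython, threads do not execute Python code truly in parallel, so `ProcessPoolExecutor` (run under an `if __name__ == "__main__":` guard) would be needed to use several cores for real work.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_sum(chunk: list[int]) -> int:
    """The work done by one 'processor': sum its share of the data."""
    return sum(chunk)

def parallel_sum(data: list[int], workers: int = 4) -> int:
    """Split the data into `workers` interleaved chunks, hand each chunk to a
    separate worker, then combine the partial results."""
    chunks = [data[i::workers] for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum, chunks))

print(parallel_sum(list(range(1, 1001))))  # 500500
```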

4.2.5 Possible Future CPU Technologies

Perhaps the major future competitor of the microchip-based microprocessor is optical computing. Although the technology for developing electronic microchips suggests that CPUs will continue to increase in power and speed for at least the next decade or so, the physical limits of the technology are already in sight. Switching from electronic to light pulses offers a number of potential advantages: light (which consists of photons) can travel faster, on narrower paths, and produces much less heat. In theory, one can even process different signals (each with a different light frequency) simultaneously using the same channel. Although the benefits of optical processing technology have already been proven in the areas of data storage (CD-ROM, CD-R) and communication (fibre optics), the more complex all-optical switches required for computing are still under development in research laboratories.
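Sharing one channel between several signals of different frequencies can be illustrated with an electrical analogy in Python. Two waves at different frequencies are added together on a single "channel", and a discrete Fourier transform then recovers which frequencies are present. This is only a sketch: real optical systems separate wavelengths with physical filters or prisms, not with this calculation, and the sample count and frequencies chosen here are arbitrary assumptions.

```python
import cmath
import math

N = 64  # samples per frame (an arbitrary choice for this illustration)

def shared_channel(freqs: list[int]) -> list[float]:
    """Superimpose one carrier wave per frequency on a single channel."""
    return [sum(math.cos(2 * math.pi * f * n / N) for f in freqs) for n in range(N)]

def frequency_strengths(signal: list[float]) -> list[float]:
    """Naive discrete Fourier transform: how strongly each frequency is present."""
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * n / N) for n, x in enumerate(signal))) / N
        for k in range(N // 2)
    ]

strengths = frequency_strengths(shared_channel([3, 11]))
detected = [k for k, s in enumerate(strengths) if s > 0.25]
print(detected)  # [3, 11]
```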

A very experimental alternative to optical and electronic technologies is the organic computer. Research indicates that, for certain applications, it is possible to let a complex organic molecule act as a primitive information processor. Since even a tiny container filled with the appropriate solution contains many trillions of these molecules, one obtains in effect a hugely parallel computer. Although this type of computer can attack combinatorial problems far beyond the scope of traditional architectures, the main problem is that programming the bio-computer relies entirely on the bio-chemical properties of the molecules.

Discovering Information Systems

Another exciting but currently still very theoretical development is the possible use of quantum properties as the basis for a new type of computer architecture. Since quantum states can exist in superposition, a register of qubits (quantum bits, i.e. bits held in a quantum state) takes on all the possible values simultaneously until it is measured. This could be exploited to speed up extremely parallel algorithms and would affect such areas as encryption, searching and error correction. To date, experimental computers with a few qubits have been built, but the actual usefulness of quantum computing still remains to be validated empirically.
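A classical Python sketch can show what "all the possible values simultaneously" means for a small register: n qubits in an equal superposition are described by 2^n amplitudes, and a measurement returns just one value, chosen with probability equal to the squared amplitude. This is a simplified simulation, assuming real-valued amplitudes (real quantum amplitudes are complex numbers); the function names are illustrative. Note that a classical simulation must store all 2^n numbers, which is exactly why it becomes impractical as registers grow.

```python
import math
import random

def uniform_superposition(n_qubits: int) -> list[float]:
    """Amplitudes for an n-qubit register in an equal superposition of all
    2**n classical values (as produced by a Hadamard gate on every qubit)."""
    size = 2 ** n_qubits
    return [1 / math.sqrt(size)] * size

def measure(state: list[float]) -> int:
    """Measurement collapses the register to ONE value, chosen with
    probability equal to its squared amplitude."""
    probabilities = [a * a for a in state]
    return random.choices(range(len(state)), weights=probabilities)[0]

register = uniform_superposition(3)
print(len(register))       # 8 values represented at once
print(measure(register))   # a single value between 0 and 7
```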

4.3 Main Memory

The function of main memory (also referred to as primary memory, main storage or internal storage) is to provide temporary storage for instructions and data during the execution of a program. Main memory is usually known as RAM, which stands for Random Access Memory.

Although microchip-based memory is virtually the only technology used by today’s computers, there exist many different types of memory chips.

4.3.1 Random Access Memory (RAM)

RAM consists of standard circuit-inscribed silicon microchips that contain many millions of tiny transistors. Very much like CPU chips, their technology follows the so-called Moore’s Law, which states that they double in capacity or power (for the same price) every 18 months. A RAM chip easily holds hundreds of megabytes (a megabyte is a million characters). RAM chips are frequently pre-soldered in sets on tiny memory circuit boards called SIMMs (Single In-line Memory Modules) or DIMMs (Dual …), which slot directly onto the motherboard: the main circuit board that holds the CPU and other essential electronic elements. The biggest disadvantage of RAM is that its contents are lost whenever the power is switched off.

There are many special types of RAM and new acronyms such as EDO RAM, VRAM etc. are being created almost on a monthly basis. Two important types of RAM are:

§ Cache memory is ultra-fast memory that operates at the speed of the CPU. Access to normal RAM is usually slower than the actual operating speed of the CPU. To avoid slowing the CPU down, computers usually incorporate some more expensive, faster cache RAM that sits between the CPU and RAM. This cache holds the data and programs that are needed immediately by the CPU. Although today’s CPUs already incorporate a certain amount of cache on the chip itself, this on-chip cache is usually supplemented by an additional, larger cache on the motherboard.

§ Flash RAM or flash memory consists of special RAM chips on a separate circuit board within a tiny casing. It fits into custom ports on many notebooks, hand-held computers and digital cameras. Unlike normal RAM, flash memory is non-volatile, i.e. it holds its contents even without external power, so it is also useful as a secondary storage device.
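How a small, fast cache spares the CPU trips to slower RAM can be sketched in Python. The model below keeps a handful of recently used addresses and counts hits (served from the cache) and misses (fetched from main memory). It is a simplification: real caches work on fixed-size blocks of memory and use hardware replacement policies, and the least-recently-used policy and class name here are assumptions.

```python
from collections import OrderedDict

class LRUCache:
    """Toy cache between the CPU and RAM holding at most `capacity` addresses.
    When full, the least recently used entry is evicted (an assumed policy)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.lines = OrderedDict()   # address -> value, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, address: int, ram: dict[int, int]) -> int:
        if address in self.lines:
            self.hits += 1
            self.lines.move_to_end(address)   # mark as most recently used
            return self.lines[address]
        self.misses += 1                      # slow trip to main memory
        value = ram[address]
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)    # evict the least recently used entry
        return value

ram = {addr: addr * 10 for addr in range(100)}
cache = LRUCache(capacity=4)
for addr in [1, 2, 3, 1, 2, 3, 4, 5, 1]:
    cache.read(addr, ram)
print(cache.hits, cache.misses)  # 3 6
```

Programs that reuse the same data repeatedly, as most do, get a high proportion of hits, which is why a small cache makes a large difference.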

4.3.2 Read-Only Memory (ROM)

A small but essential element of any computer, ROM also consists of electronic memory microchips but, unlike RAM, it does not lose its contents when the power is switched off. Its function is also very different from that of RAM. Since it is difficult or impossible to change the contents of ROM, it is typically used to hold program instructions that are unlikely to change during the lifetime of the computer. The main application of ROM is to store the so-called boot program: the instructions that the computer must follow just after it has been switched on to perform a self-diagnosis and then to load the operating system from secondary storage. ROM chips are also found in many devices which contain programs that are unlikely to change over a significant period of time, such as telephone switchboards, video recorders or pocket calculators. Just like RAM, ROM comes in a number of different forms:

§ PROM (Programmable Read-Only Memory) is initially empty and can be custom-programmed once only using special equipment. Loading or programming the contents of ROM is called burning the chip since it is the electronic equivalent of blowing tiny transistor fuses within the chip. Once programmed, ordinary PROMs cannot be modified afterwards.

§ EPROM (Erasable Programmable Read-Only Memory) is like PROM but, by using special equipment such as an ultra-violet light gun, the memory contents can be erased so that the EPROM can be re-programmed.

§ EEPROM (Electrically Erasable Programmable Read-Only Memory) is similar to EPROM but it can be re-programmed using special electronic pulses rather than ultra-violet light, so no special equipment is required.
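The difference between the three ROM variants can be modelled in a few lines of Python. The sketch assumes that an unprogrammed cell reads as all 1s (intact fuses, as erased EPROMs typically read) and that burning can only change 1-bits into 0-bits; the class and method names are hypothetical. Erasure, whether by ultra-violet light or electrically, simply returns cells to the blank state.

```python
BLANK = 0xFF  # assumption: an unprogrammed 8-bit cell reads as all 1s

class PROM:
    """Write-once memory: burning can only blow fuses (turn 1-bits into 0-bits)."""

    def __init__(self, size: int):
        self.cells = [BLANK] * size

    def burn(self, address: int, value: int) -> None:
        # A 1-bit in `value` where the cell already holds a 0 would need a fuse restored.
        if value & ~self.cells[address] & 0xFF:
            raise ValueError("cannot restore a blown fuse")
        self.cells[address] &= value

    def read(self, address: int) -> int:
        return self.cells[address]

class EPROM(PROM):
    def erase(self) -> None:
        """Ultra-violet erasure: the whole chip returns to its blank state."""
        self.cells = [BLANK] * len(self.cells)

class EEPROM(PROM):
    def erase_byte(self, address: int) -> None:
        """Electrical erasure of a single cell, with no UV equipment needed."""
        self.cells[address] = BLANK

chip = EPROM(4)
chip.burn(0, 0xA5)
print(hex(chip.read(0)))   # 0xa5
try:
    chip.burn(0, 0xFF)     # trying to "un-burn" bits fails
except ValueError as err:
    print("error:", err)
chip.erase()
print(hex(chip.read(0)))   # 0xff, ready to be re-programmed
```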

4.4 Secondary Storage Devices

Since the main memory of a computer has a limited capacity, it is necessary to retain data in secondary storage between different processing cycles. This is the medium used to store the program instructions as well as the data required for future processing. Most secondary storage devices in use today are based on magnetic or optical technologies.

4.4.1 Disk drives

The disk drive is the most popular secondary storage device, and is found in both mainframe and microcomputer environments. The central mechanism of the disk drive is a flat disk, coated with a magnetisable substance. As this disk rotates, information can be read from or written to it by means of a head. The head is fixed on an arm and can move across the radius of the disk. Each position of the arm corresponds to a “track” on the disk, which can be visualised as one concentric circle of magnetic data. The data on a track is read sequentially as the disk spins underneath the head. There are quite a few different types of disk drives.
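Because the head must first move to the right track and then wait for the data to spin underneath it, the time to reach a piece of data can be estimated in Python. On average the wanted data is half a revolution away. The 7200 rpm rotation speed and 6 ms seek time below are assumed example values, not figures from the text.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """On average, the wanted data is half a revolution away from the head."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2

def avg_access_time_ms(seek_ms: float, rpm: float) -> float:
    """Move the arm to the right track (seek), then wait for the data to rotate under the head."""
    return seek_ms + avg_rotational_latency_ms(rpm)

print(round(avg_rotational_latency_ms(7200), 2))   # 4.17
print(round(avg_access_time_ms(6.0, 7200), 2))     # 10.17
```

The result is in the same range as the access times of around 10 ms typical of PC hard disks.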


In Winchester hard drives, the disk, access arm and read/write heads are combined in one single sealed module. This unit is not normally removable, though there are some models available where the unit as a whole can be swapped in and out of a specially designed drive bay. Since the drives are not handled physically, they are less likely to be contaminated by dust and are therefore much more reliable. Mass production and technology advances have brought dramatic improvements in storage capacity, with terabyte hard drives being state of the art at the end of 2006. Current disk storage costs as little as R1 per gigabyte.

Large organisations such as banks, telcos and life insurance companies require huge amounts of storage space, often in the order of many terabytes (one terabyte is one million megabytes, or a trillion characters). This was typically provided by a roomful of large, high-capacity hard drive units. Increasingly, these are being replaced by redundant arrays of independent disks (RAIDs). A RAID consists of an independently powered cabinet that contains a number (10 to 100) of microcomputer Winchester-type drives but functions as one single secondary storage unit. The advantages of a RAID are its high-speed access and relatively low cost. In addition, a RAID provides extra data security by means of its fault-tolerant design, whereby critical data is mirrored (stored twice on different drives), thus providing physical data redundancy. Should a mirrored drive fail, the other drive steps in automatically as a backup.
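The mirroring described above can be sketched in Python: every write goes to both drives, and a read is served by whichever drive is still working. This is a minimal model of the idea only; real RAID controllers work on disk blocks in hardware or firmware, and the class and method names here are hypothetical.

```python
class MirroredPair:
    """Sketch of mirroring: each block is written to two drives, and reads
    fall back to the surviving copy when one drive has failed."""

    def __init__(self):
        self.drives = [{}, {}]          # each dict maps block number -> data
        self.failed = [False, False]

    def write(self, block: int, data: bytes) -> None:
        for i, drive in enumerate(self.drives):
            if not self.failed[i]:
                drive[block] = data

    def read(self, block: int) -> bytes:
        for i, drive in enumerate(self.drives):
            if not self.failed[i]:
                return drive[block]
        raise OSError("both mirrored drives have failed")

    def fail(self, i: int) -> None:
        self.failed[i] = True

array = MirroredPair()
array.write(7, b"account balances")
array.fail(0)            # the primary drive dies
print(array.read(7))     # b'account balances', served by the mirror
```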

A low-cost, low-capacity version of the hard disk was popularised by the microcomputer. The diskette consists of a flexible Mylar disk, coated with a magnetic surface, inside a thin, non-removable plastic sleeve. The early versions of the diskette were fairly large (8” or 5¼”) and had a flexible sleeve, hence the name floppy diskette. These were rapidly replaced by a diskette version in a sturdier sleeve, the stiffy disk, which despite its smaller size (3½”) can hold more data. Although the popular IBM format only holds 1,44 megabytes, a number of manufacturers have developed diskette drives that can store from 100 to 250 megabytes per stiffy. An alternative development is the removable disk cartridge, which is similar in structure to an internal hard drive but provides portability, making it useful for backup purposes.

4.4.2 Magnetic tape

While disk and optical storage have overtaken magnetic tape as the most popular method of storing data in a computer, tape is still used occasionally, in particular for keeping archive copies of important files.

The main drawback of magnetic tape is that it is not very efficient for accessing data in any way other than strictly sequential order. As an illustration, compare a CD player (which can skip to any track almost instantly) with a music tape recorder (which has to wind the tape all the way through if one wants to listen to a song near the end). In computer terms, the ability to access any record, track, or even part within a song directly is called the direct access method. In the case of the tape recorder one may have to wind laboriously through the tape until one reaches the song required – this is referred to as the sequential access method.
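The contrast between the two access methods can be sketched in Python by counting how many records must be passed to reach a wanted one. The "step" counts are a deliberate simplification; the song names and the index used for direct access are illustrative assumptions.

```python
def sequential_read(records: list[str], target: str) -> tuple[str, int]:
    """Tape-style access: wind past every record until the target is reached."""
    for steps, record in enumerate(records, start=1):
        if record == target:
            return record, steps
    raise KeyError(target)

def direct_read(index: dict[str, int], records: list[str], target: str) -> tuple[str, int]:
    """CD/disk-style access: look up the position and jump straight to it."""
    return records[index[target]], 1

songs = [f"track {n}" for n in range(1, 13)]
index = {name: pos for pos, name in enumerate(songs)}
print(sequential_read(songs, "track 12"))     # ('track 12', 12)
print(direct_read(index, songs, "track 12"))  # ('track 12', 1)
```

With sequential access the cost grows with the position of the record; with direct access it stays roughly constant.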


The high-density diskette and recordable optical disk have all but eroded the marginal cost advantage that tape storage enjoyed. This technology is therefore disappearing fast.

4.4.3 Optical disk storage

Optical disks, on the other hand, are rapidly becoming the storage medium of choice for the mass distribution of data/programs and the backup of data. Similar to disk storage, information is stored on and read from a circular disk. However, instead of a magnetic read head, a tiny laser beam is used to detect microscopic pits burnt into a plastic disk coated with reflective material. The pits determine whether most of the laser light is reflected back or scattered, thus making for a binary “on” or “off”. In contrast to hard disks, data is not stored in concentric tracks but in one long continuous spiral track.

Trivial fact: The spiral track used to store data on a CD is over six kilometres long.

A popular optical disk format is the 12-cm CD-ROM. The widespread use of music compact discs has made the technology very pervasive and cheap. Production costs for a CD-ROM are less than R1, even for relatively small production volumes. The drive reader units themselves have also dropped in price and now cost hardly more than a diskette drive. A standard CD-ROM can store 650 megabytes of data and the data can be transferred at many megabytes per second, though accessing non-sequential data takes much longer.

The CD-ROM is a read-only medium: data cannot be recorded onto the disk. The low cost and relatively large capacity make the CD-ROM ideally suited to the distribution of software. It is also ideal for the low-cost distribution of large quantities of information such as product catalogues, reference materials, conference proceedings, databases, etc. It is indispensable for the storage of multimedia, where traditional textual information is supplemented with sound, music, voice, pictures, animation and even video clips.

The limitation of the read-only format led to the development of low-cost recordable optical disks. The compact disk recordable (CD-R) is a write-once, read-many (WORM) technology.

The CD-R drive unit takes a blank optical disk and burns data onto it using a higher-powered laser. This disk can then be read and distributed as an ordinary CD-ROM, with the advantage that the data is non-volatile i.e. permanent. The rapid drop in the cost of drive units and blank recording media (less than R2 per CD-R) is making this a very competitive technology for data backup and small-scale data distribution.

Although the 650 megabytes initially seemed almost limitless, many multimedia and video applications now require more storage. A new format, the Digital Versatile Disc (DVD) standard, increased the capacity of the CD-ROM by providing high-density, double-sided and double-layered discs. By combining the increased storage capacity with sophisticated data compression algorithms, a DVD can easily store 10 times as much as a CD, sufficient for a full-length, high-quality digital motion picture with many simultaneous sound tracks.

Even the DVD does not offer sufficient storage capacity for some applications, and two optical technologies have been developed to increase storage capacity even further. The basic specifications of HD-DVD and Blu-Ray provide for 15 GB and 25 GB per layer respectively, although multi-layer Blu-Ray discs with capacities of more than 200 GB have already been developed.

A promising research area involves the use of holographic disk storage, whereby data is stored in a three-dimensional manner. Though the technology is still in its infancy, early prototypes promise a many-fold increase in storage capacity, and holographic storage could become the answer to the ever-increasing storage requirements of the next decade.

| Device | Access speed | Capacity | Cost |
| --- | --- | --- | --- |
| RAM | < 2 ns | 256 MB (chip) | < R1/MB |
| Tape | sequential only | 500 MB-4 GB | < 10c/MB |
| Diskette (3½”) | 300 ms | 1,44 MB | R1/MB |
| PC hard disk | 10 ms | 40-750 GB | < 2c/MB |
| Mainframe (M/F) hard disk | 25 ms | 100+ GB | R2/MB |
| CD-ROM | < 100 ms | 660 MB | < 0.1c/MB |
| CD-R | < 100 ms | 660 MB | < 0.2c/MB |
| DVD | < 100 ms | 8 GB | < 0.1c/MB |
| HD-DVD | < 100 ms | 30 GB | ? |
| Blu-Ray | < 100 ms | 25 GB-200 GB | ? |

Figure 4-3: Comparison of secondary storage devices

4.5 Output Devices

The final stage of information processing involves the use of output devices to transform computer-readable data back into an information format that can be processed by humans. As with input devices, when deciding on an output device you need to consider what sort of information is to be displayed, and who is intended to receive it.

One distinction that can be drawn between output devices is that of hardcopy versus softcopy devices. Hardcopy devices (printers) produce a tangible and permanent output whereas softcopy devices (display screens) present a temporary, fleeting image.

4.5.1 Display screens

The desk-based computer screen is the most popular output device. The standard monitor works on the same principle as the normal TV tube: an electron “gun” fires electrically charged particles (electrons) onto a specially coated tube (hence the name Cathode-Ray Tube or CRT). Where the particles hit the coating, it is “excited” and emits light. A strong magnetic field guides the particle stream to form the text or graphics on your familiar monitor.

CRTs vary substantially in size and resolution. Screen size is usually measured in inches diagonally from corner to corner and varies from as little as 12 or 14 inches for standard PCs to as much as 40+ inches for large demonstration and video-conferencing screens. The screen resolution depends on a number of technical factors.
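Because screen size is quoted as a diagonal, the actual width and height depend on the screen's shape. A short Python sketch using Pythagoras' theorem recovers them; the 4:3 and 16:9 aspect ratios used below are assumed examples, not figures from the text.

```python
import math

def screen_dimensions(diagonal: float, aspect_w: int = 4, aspect_h: int = 3) -> tuple[float, float]:
    """Width and height of a screen, given its diagonal and aspect ratio."""
    unit = diagonal / math.hypot(aspect_w, aspect_h)   # length of one aspect "unit"
    return aspect_w * unit, aspect_h * unit

print(screen_dimensions(15))   # (12.0, 9.0): a 15-inch 4:3 screen
w, h = screen_dimensions(40, 16, 9)
print(round(w, 1), round(h, 1))  # 34.9 19.6: a 40-inch 16:9 screen
```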


A technology that has received much impetus from the fast-growing laptop and notebook market is the liquid crystal display (LCD). LCDs have matured quickly, increasing in resolution, contrast and colour quality. Their main advantages are lower energy requirements and their thin, flat size. Although alternative technologies are already being explored in research laboratories, LCDs currently dominate the “flat display” market.

Organic light-emitting diodes (OLEDs) can generate brighter and faster images than LCD technology, and allow thinner screens, but they have less stable colour characteristics, making them more suitable for cellular telephone displays than for computers.

Another screen-related technology is the video projection unit. Originally developed for the projection of video films, the current trend towards more portable LCD-based lightweight projectors is fuelled by the needs of computer-driven public presentations. Today’s units fit easily into a small suitcase and project a computer presentation in very much the same way a slide projector shows a slide presentation. They are rapidly replacing the flat transparent LCD panels that needed to be placed on top of an overhead projection unit. Though the LCD panels are more compact, weigh less and are much cheaper, their image is generally of much poorer quality and less bright.

4.5.2 Printers and plotters

Printers are the most popular output device for producing permanent, paper-based computer output. Although they are all hardcopy devices, a distinction can be made between impact and non-impact printers. With impact printers, a hammer or needle physically hits an inked ribbon to leave an ink impression of the desired shape on the paper. The advantage of the impact printer is that it can produce more than one simultaneous copy by using carbon or chemically-coated paper. Non-impact printers, on the other hand, have far fewer mechanically moving parts and are therefore much quieter and tend to be more reliable.

The following are the main types of printers currently in use.

§ Dot-matrix printers used to be the familiar low-cost printers connected to many personal computers. The print head consists of a vertical row of needles, each of which is individually controlled by a magnet. As the print head moves horizontally across the paper, the individual needles strike the paper (and the ribbon in between) as directed by the control mechanism to produce text characters or graphics. A close inspection of a dot-matrix printout will reveal the constituent dots that make up the text. Although it is one of the cheapest printer options, its print quality is generally much lower than that of laser and ink-jet printers. However, today’s models are fast and achieve much better quality by using more needles.

§ Laser printers are quickly growing in market share. They work on the same principle as the photocopier. A laser beam, toggled on and off very quickly, illuminates selected areas on a photo-sensitive drum, where the light is converted into electrical charge. As the drum rotates into a “bed” of carbon particles (“toner”) with the opposite charge, these particles adhere to the drum. The blank paper is then pressed against the drum so that the particles “rub off” onto the paper sheet. The sheet then passes through a high-temperature area so that the carbon particles are permanently fused onto the paper. Current high-end laser printers can cope with extremely large printing volumes, as required, e.g., by banks to print their millions of monthly account statements. Laser technology continues to develop in tandem with photocopier technology. Laser printers can now handle colour printing and double-sided printing, or combine with mail equipment to perforate, fold, address and seal printouts automatically into envelopes. At the lower end of the scale are the low-cost